For local gRPC calls, there are better transport choices than TCP/IP: Unix domain sockets (UDS) and named pipes.

Why should you consider UDS and named pipes?

Both Unix domain sockets and named pipes (also known as FIFOs on Unix-like systems) offer better performance than TCP/IP because they avoid the overhead of the network stack. In our scenario, we have a variable number of worker processes on a single server, and managing ports and firewall rules for them is a nightmare. UDS uses file paths instead of IP addresses and port numbers, which simplifies configuration and setup. The same goes for named pipes, which are addressed by name.

Benchmark

The setup is quite similar to the one in "Compare the performance of HTTP/1.1, HTTP/2 and HTTP/3", except that here we are testing a gRPC service instead of a web service.

Server

The server code is based on the template generated by `dotnet new grpc -o GrpcGreeter`, with the two changes below:

- Besides the default TCP/IP endpoint, I added Unix domain socket and named pipe endpoints. The server listens on all three transports at the same time.

- I added a new RPC method, `Download`, that returns the requested number of bytes (from 10 bytes to 1 MB) to better reflect real-world scenarios.
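As a rough sketch of the first change, the three endpoints could be registered in Kestrel as shown below. This is not the actual server code: it assumes .NET 8 or later (where `ListenNamedPipe` is available), the `GreeterService` class and `GrpcGreeter.Services` namespace produced by the template, and a socket path and pipe name chosen to match the client URLs used in this post.

```csharp
using Microsoft.AspNetCore.Server.Kestrel.Core;
using GrpcGreeter.Services; // namespace generated by the template (assumption)

var builder = WebApplication.CreateBuilder(args);
builder.Services.AddGrpc();

// Assumed socket path: <temp dir>\greeter.tmp, matching the unix: URL given to the client.
var socketPath = Path.Combine(Path.GetTempPath(), "greeter.tmp");

// A stale socket file from a previous run prevents Kestrel from binding, so remove it first.
if (File.Exists(socketPath))
{
    File.Delete(socketPath);
}

builder.WebHost.ConfigureKestrel(options =>
{
    // 1. TCP/IP with TLS on https://localhost:5001.
    options.ListenLocalhost(5001, listen =>
    {
        listen.Protocols = HttpProtocols.Http2;
        listen.UseHttps();
    });

    // 2. Unix domain socket, addressed by a file path.
    options.ListenUnixSocket(socketPath, listen =>
    {
        listen.Protocols = HttpProtocols.Http2;
    });

    // 3. Named pipe, addressed by a pipe name (assumed to be "PipeGreeter").
    options.ListenNamedPipe("PipeGreeter", listen =>
    {
        listen.Protocols = HttpProtocols.Http2;
    });
});

var app = builder.Build();
app.MapGrpcService<GreeterService>();
app.Run();
```

All three endpoints serve the same gRPC service, so the benchmark client only needs to change how it connects.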

Client

The client is a wrk-like benchmark tool for gRPC, written in C#.

Key parameters:

- 3 runs: the final result shown below is the median of the 3 runs

- in each run, we request data of different sizes, from 10 bytes to 1 MB

- for each size, the test runs for 20 seconds with 400 concurrent connections across 5 threads
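The median-of-3 aggregation can be sketched as follows. The `RunResult` and `Aggregator` names are hypothetical, and picking the run with the median throughput (and reporting that run's latency with it) is an assumption about how the tool aggregates, not its actual code.

```csharp
using System;
using System.Linq;

// One measurement for a given response size (hypothetical type).
public record RunResult(int Size, int RequestsPerSec, double AvgLatencyMs);

public static class Aggregator
{
    // Returns the run with the median throughput.
    // With an even number of runs this picks the upper of the two middle runs.
    public static RunResult MedianByThroughput(params RunResult[] runs)
    {
        if (runs.Length == 0)
        {
            throw new ArgumentException("At least one run is required.", nameof(runs));
        }

        var ordered = runs.OrderBy(r => r.RequestsPerSec).ToArray();
        return ordered[ordered.Length / 2];
    }
}
```

For the three size-10 TCP/IP runs in the output below (154696, 168012 and 168386 requests/sec), this selects the 168012 run with its 2.38 ms latency, which matches the first line of the final results.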

Results

Full output of benchmarking TCP/IP

PS C:\Users\xuryan> GrpcGreeterClient.exe -U https://localhost:5001

===================================

Round 1/3

===================================

Running 20s test @ https://localhost:5001 with size 10

5 threads and 400 connections

3093920 requests in 20s, 0.03GB read

Requests/sec: 154696

Average Latency: 2.59ms

Running 20s test @ https://localhost:5001 with size 100

5 threads and 400 connections

3367059 requests in 20s, 0.31GB read

Requests/sec: 168352

Average Latency: 2.38ms

Running 20s test @ https://localhost:5001 with size 1000

5 threads and 400 connections

3064905 requests in 20s, 2.85GB read

Requests/sec: 153245

Average Latency: 2.61ms

Running 20s test @ https://localhost:5001 with size 10000

5 threads and 400 connections

1308877 requests in 20s, 12.19GB read

Requests/sec: 65443

Average Latency: 6.11ms

Running 20s test @ https://localhost:5001 with size 100000

5 threads and 400 connections

330798 requests in 20s, 30.81GB read

Requests/sec: 16539

Average Latency: 24.19ms

Running 20s test @ https://localhost:5001 with size 1000000

5 threads and 400 connections

32037 requests in 20s, 29.84GB read

Requests/sec: 1601

Average Latency: 250.98ms

===================================

Round 2/3

===================================

Running 20s test @ https://localhost:5001 with size 10

5 threads and 400 connections

3360259 requests in 20s, 0.03GB read

Requests/sec: 168012

Average Latency: 2.38ms

Running 20s test @ https://localhost:5001 with size 100

5 threads and 400 connections

3339618 requests in 20s, 0.31GB read

Requests/sec: 166980

Average Latency: 2.40ms

Running 20s test @ https://localhost:5001 with size 1000

5 threads and 400 connections

2998046 requests in 20s, 2.79GB read

Requests/sec: 149902

Average Latency: 2.67ms

Running 20s test @ https://localhost:5001 with size 10000

5 threads and 400 connections

1391264 requests in 20s, 12.96GB read

Requests/sec: 69563

Average Latency: 5.75ms

Running 20s test @ https://localhost:5001 with size 100000

5 threads and 400 connections

331975 requests in 20s, 30.92GB read

Requests/sec: 16598

Average Latency: 24.11ms

Running 20s test @ https://localhost:5001 with size 1000000

5 threads and 400 connections

31251 requests in 20s, 29.10GB read

Requests/sec: 1562

Average Latency: 257.07ms

===================================

Round 3/3

===================================

Running 20s test @ https://localhost:5001 with size 10

5 threads and 400 connections

3367724 requests in 20s, 0.03GB read

Requests/sec: 168386

Average Latency: 2.38ms

Running 20s test @ https://localhost:5001 with size 100

5 threads and 400 connections

3354996 requests in 20s, 0.31GB read

Requests/sec: 167749

Average Latency: 2.38ms

Running 20s test @ https://localhost:5001 with size 1000

5 threads and 400 connections

3001872 requests in 20s, 2.80GB read

Requests/sec: 150093

Average Latency: 2.66ms

Running 20s test @ https://localhost:5001 with size 10000

5 threads and 400 connections

1361260 requests in 20s, 12.68GB read

Requests/sec: 68063

Average Latency: 5.88ms

Running 20s test @ https://localhost:5001 with size 100000

5 threads and 400 connections

320817 requests in 20s, 29.88GB read

Requests/sec: 16040

Average Latency: 24.95ms

Running 20s test @ https://localhost:5001 with size 1000000

5 threads and 400 connections

31752 requests in 20s, 29.57GB read

Requests/sec: 1587

Average Latency: 253.54ms

Final results:

10, 168012, 2.38

100, 167749, 2.38

1000, 150093, 2.66

10000, 68063, 5.88

100000, 16539, 24.19

1000000, 1587, 253.54

PS C:\Users\xuryan>

For the other two transports, the value of the URL parameter (`-U`) is `unix:C:\Users\xuryan\AppData\Local\Temp\greeter.tmp` for UDS and `pipe:/PipeGreeter` for named pipes.
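A minimal sketch of how a client might turn these URL values into a connection is shown below. It relies on the `SocketsHttpHandler.ConnectCallback` hook from the .NET base class library; the `LocalTransport` class and the way the `unix:` and `pipe:/` prefixes are parsed are assumptions for illustration, not the benchmark tool's actual code.

```csharp
using System;
using System.IO.Pipes;
using System.Net.Http;
using System.Net.Sockets;
using System.Security.Principal;

public static class LocalTransport
{
    // Builds an HTTP handler whose connections use the transport named by the URL.
    public static SocketsHttpHandler CreateHandler(string url)
    {
        if (url.StartsWith("unix:", StringComparison.Ordinal))
        {
            // Everything after "unix:" is treated as the socket file path.
            var endPoint = new UnixDomainSocketEndPoint(url.Substring("unix:".Length));
            return new SocketsHttpHandler
            {
                ConnectCallback = async (_, cancellationToken) =>
                {
                    var socket = new Socket(AddressFamily.Unix, SocketType.Stream, ProtocolType.Unspecified);
                    try
                    {
                        await socket.ConnectAsync(endPoint, cancellationToken);
                        return new NetworkStream(socket, ownsSocket: true);
                    }
                    catch
                    {
                        socket.Dispose();
                        throw;
                    }
                }
            };
        }

        if (url.StartsWith("pipe:/", StringComparison.Ordinal))
        {
            // Everything after "pipe:/" is treated as the pipe name.
            var pipeName = url.Substring("pipe:/".Length);
            return new SocketsHttpHandler
            {
                ConnectCallback = async (_, cancellationToken) =>
                {
                    var pipe = new NamedPipeClientStream(
                        ".", pipeName, PipeDirection.InOut,
                        PipeOptions.WriteThrough | PipeOptions.Asynchronous,
                        TokenImpersonationLevel.Anonymous);
                    try
                    {
                        await pipe.ConnectAsync(cancellationToken);
                        return pipe;
                    }
                    catch
                    {
                        pipe.Dispose();
                        throw;
                    }
                }
            };
        }

        // Any other URL (e.g. https://localhost:5001) keeps the default TCP/IP connection logic.
        return new SocketsHttpHandler();
    }
}
```

With Grpc.Net.Client, the returned handler can be passed as `GrpcChannelOptions.HttpHandler` to `GrpcChannel.ForAddress`. For UDS and named pipes the channel address (for example `http://localhost`) is only a placeholder, because the callback decides where the connection actually goes.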

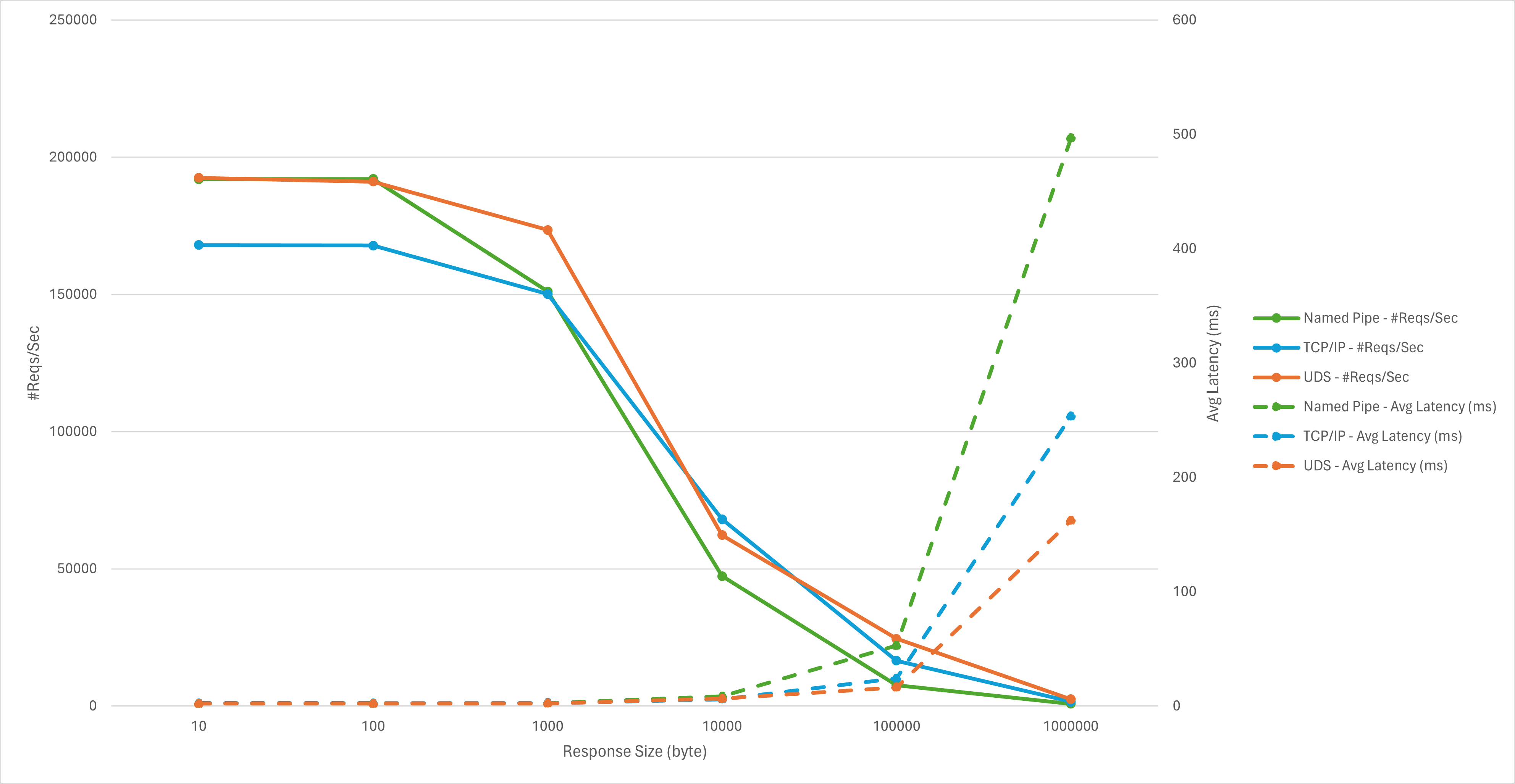

The final results are shown in the table below:

Reqs/Sec

| Response size (bytes) | TCP/IP | UDS | Named pipe |
|---|---|---|---|
| 10 | 168012 | 192494 | 191990 |
| 100 | 167749 | 191069 | 192055 |
| 1000 | 150093 | 173510 | 151084 |
| 10000 | 68063 | 62253 | 47306 |
| 100000 | 16539 | 24515 | 7565 |
| 1000000 | 1587 | 2472 | 813 |

Avg Latency (ms)

| Response size (bytes) | TCP/IP | UDS | Named pipe |
|---|---|---|---|
| 10 | 2.38 | 2.08 | 2.08 |
| 100 | 2.38 | 2.09 | 2.08 |
| 1000 | 2.66 | 2.31 | 2.65 |
| 10000 | 5.88 | 6.43 | 8.46 |
| 100000 | 24.19 | 16.32 | 52.92 |
| 1000000 | 253.54 | 162.25 | 496.73 |

Conclusion

UDS shows a significant improvement over TCP/IP. Specifically, requests per second rose by around 15% when the response is small (10 to 1000 bytes), and by roughly 50% or more for responses of 100 KB and larger. That is, UDS performs much better when we need to transmit large amounts of data. The one exception in this benchmark is the 10 KB response, where UDS was about 9% slower than TCP/IP. Named pipes are as efficient as UDS for small responses (up to 100 bytes), but they degrade sharply as the response grows: from 10 KB upward they are slower than even TCP/IP, with less than half its throughput at 100 KB. In short, we should use UDS as much as possible for local calls.
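The percentages in this conclusion can be re-derived from the Reqs/Sec table with a few lines of arithmetic. The sketch below uses a hypothetical `ThroughputComparison` helper; the numbers are copied from the table above.

```csharp
using System;

public static class ThroughputComparison
{
    // Rows copied from the Reqs/Sec table: response size in bytes, TCP/IP, UDS, named pipe.
    public static readonly (int Size, int Tcp, int Uds, int Pipe)[] Rows =
    {
        (10, 168012, 192494, 191990),
        (100, 167749, 191069, 192055),
        (1000, 150093, 173510, 151084),
        (10000, 68063, 62253, 47306),
        (100000, 16539, 24515, 7565),
        (1000000, 1587, 2472, 813),
    };

    // Relative throughput change of a candidate transport versus the baseline, in percent.
    public static double PercentChange(double baseline, double candidate) =>
        (candidate - baseline) / baseline * 100.0;

    // Prints the change of UDS and named pipes relative to TCP/IP for every response size.
    public static void Print()
    {
        foreach (var row in Rows)
        {
            Console.WriteLine(
                $"{row.Size,8} bytes: UDS {PercentChange(row.Tcp, row.Uds),6:F1}%, " +
                $"named pipe {PercentChange(row.Tcp, row.Pipe),6:F1}%");
        }
    }
}
```

Relative to TCP/IP, this gives about +14.6% for UDS at 10 bytes, -8.5% at 10 KB, +48.2% at 100 KB and +55.8% at 1 MB, while named pipes come out at about -54.3% at 100 KB.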